The Hidden Cost of Kubernetes

Kubernetes is powerful—but it's also expensive when not optimized. Most organizations overspend on K8s infrastructure by 30-50% without realizing it. The culprits? Over-provisioned nodes, resource requests that don't match reality, idle capacity from poor bin-packing, and zero visibility into per-workload costs.

Unlike traditional infrastructure, Kubernetes costs are notoriously hard to track. Cloud provider bills show node costs, but not which applications or teams are consuming resources. Without this visibility, teams default to over-provisioning "just to be safe"—and costs spiral.

Kubernetes FinOps applies cloud cost management principles specifically to K8s environments. It combines technical optimization (rightsizing, spot instances, autoscaling) with organizational practices (cost allocation, showback, accountability) to create sustainable cost efficiency.

Why K8s Costs Spiral Out of Control

Understanding the root causes of Kubernetes cost overruns is the first step to fixing them.

Over-Provisioned Nodes

Teams choose large node types "for safety" without analyzing actual requirements. Result: paying for capacity that's never used.

Inflated Resource Requests

Developers set CPU/memory requests based on guesses, not data. Requests often exceed actual usage by 2-5x, and the scheduler treats that reserved capacity as unavailable to other pods.

Poor Bin-Packing

The Kubernetes scheduler places pods based on their requests, not their actual usage, so it can't pack pods efficiently when requests don't match reality. Nodes run at 20-30% utilization while appearing "full."
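To make the gap concrete, here is a minimal Python sketch that compares what is reserved on a node with what is actually consumed. The Pod class, the node_cpu_summary function, and every number are hypothetical and chosen for illustration; in practice the usage figures would come from your own metrics pipeline.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    cpu_request: float  # cores reserved for the pod at scheduling time
    cpu_usage: float    # cores actually consumed (hypothetical measurement)


def node_cpu_summary(pods: list[Pod], allocatable_cpu: float) -> dict[str, float]:
    """Compare reserved CPU with consumed CPU as a share of node capacity."""
    requested = sum(p.cpu_request for p in pods)
    used = sum(p.cpu_usage for p in pods)
    return {
        "requested_pct": round(100 * requested / allocatable_cpu, 1),
        "used_pct": round(100 * used / allocatable_cpu, 1),
    }


# Illustrative pods on a node with 4 allocatable cores.
pods = [
    Pod("api", cpu_request=2.0, cpu_usage=0.5),
    Pod("worker", cpu_request=1.0, cpu_usage=0.3),
    Pod("cache", cpu_request=0.8, cpu_usage=0.2),
]
summary = node_cpu_summary(pods, allocatable_cpu=4.0)
print(summary)  # requested_pct is 95.0, used_pct is 25.0
```

In this example the node looks 95% full to the scheduler while only a quarter of its CPU is doing work.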

Zero Cost Visibility

Cloud bills show node costs, not workload costs. Teams have no idea what their applications actually cost, preventing accountability.

Idle Non-Production Clusters

Dev, staging, and test clusters run 24/7 but are only used during business hours. More than 70% of their cost is pure waste.
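The 70% figure follows from simple arithmetic. As a rough sketch, assume a team is active about 10 hours a day, 5 days a week (an illustrative assumption, not a measured value); the idle_fraction helper below is a hypothetical name.

```python
HOURS_PER_WEEK = 24 * 7  # 168 hours


def idle_fraction(active_hours_per_day: float, active_days_per_week: int) -> float:
    """Share of the week during which an always-on cluster sits unused."""
    active_hours = active_hours_per_day * active_days_per_week
    return 1 - active_hours / HOURS_PER_WEEK


# 50 active hours out of 168 leaves 118 idle hours.
print(f"{idle_fraction(10, 5):.0%}")  # prints 70%
```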

Missing Spot Instances

Spot/preemptible instances offer 60-90% discounts but require proper configuration. Most clusters don't use them at all.

Atmosly for Kubernetes FinOps

Our proprietary platform provides the visibility and intelligence needed to optimize Kubernetes costs at scale.

Atmosly gives you what cloud provider tools can't—real-time cost visibility per namespace, workload, and team. Combined with AI-powered rightsizing recommendations, you'll know exactly where to optimize.

Cluster Inventory

Complete visibility into all your Kubernetes clusters across AWS EKS, GCP GKE, and Azure AKS. Track node types, counts, and utilization in real-time.

Rightsizing Recommendations

AI-powered analysis of resource usage patterns. Get specific recommendations for pod requests/limits and node sizing based on actual utilization data.

Cost Allocation

Break down K8s costs by namespace, label, team, or application. Enable showback and chargeback to drive accountability across engineering teams.
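One common way to approach this kind of breakdown is to split each node's cost across namespaces in proportion to the CPU their pods request. The sketch below illustrates that general idea only; it is not a description of how Atmosly computes allocation, and the allocate_node_cost function, namespace names, and prices are hypothetical.

```python
from collections import defaultdict


def allocate_node_cost(node_hourly_cost: float,
                       pod_requests: list[tuple[str, float]]) -> dict[str, float]:
    """Split a node's hourly cost across namespaces by requested CPU.

    pod_requests is a list of (namespace, cpu_request_in_cores) pairs.
    Unrequested capacity is spread across namespaces in the same proportion.
    """
    total_requested = sum(cpu for _, cpu in pod_requests)
    if total_requested == 0:
        return {}
    costs: dict[str, float] = defaultdict(float)
    for namespace, cpu in pod_requests:
        costs[namespace] += node_hourly_cost * cpu / total_requested
    return dict(costs)


# Illustrative node at $0.40/hour running pods from two namespaces.
shares = allocate_node_cost(0.40, [("payments", 2.0), ("search", 1.0), ("payments", 1.0)])
print({ns: round(cost, 2) for ns, cost in shares.items()})
```

A fuller model would also weigh memory, storage, and network, and might report idle capacity as its own line item rather than spreading it across teams.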

Cost Anomaly Detection

Get alerted when costs spike unexpectedly. Identify runaway workloads, resource leaks, or configuration changes that impact spend before they become budget problems.
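As a simple illustration of the idea, a cost spike can be flagged by comparing today's spend with the recent daily average. The sketch below uses a basic z-score test; it is an assumed, deliberately simple technique and not Atmosly's detection method. The function name, threshold, and sample figures are hypothetical.

```python
from statistics import mean, stdev


def is_cost_anomaly(history: list[float], today: float, threshold: float = 3.0) -> bool:
    """Flag today's cost if it sits more than `threshold` standard
    deviations above the mean of the recent daily history."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today > mu
    return (today - mu) / sigma > threshold


# Illustrative daily costs in dollars for the past week.
last_week = [100, 102, 98, 101, 99, 100, 103]
print(is_cost_anomaly(last_week, 140))  # prints True
print(is_cost_anomaly(last_week, 102))  # prints False
```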

Savings Tracking

Track optimization progress over time. See exactly how much you've saved through rightsizing, spot instances, and other optimizations, with a full audit trail.

Multi-Cloud Support

Unified K8s cost management across AWS, GCP, and Azure. Compare costs across providers and identify optimization opportunities in all environments.

K8s Cost Challenges We Solve

No Visibility Into Workload Costs

Cloud bills show EC2/GCE/VM costs but not which Kubernetes workloads are consuming them. Teams can't optimize what they can't measure.

Our Solution

Atmosly provides cost allocation by namespace, deployment, and custom labels. Every team sees their actual K8s costs with daily granularity.

Resource Requests vs Reality

Developers set resource requests based on worst-case assumptions. Actual usage is often 20-30% of requested resources, and the unused remainder stays reserved, blocking capacity that other workloads could use.

Our Solution

Analyze actual usage patterns over time. Provide specific rightsizing recommendations with confidence levels. Implement Vertical Pod Autoscaler for automated optimization.
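A rightsizing recommendation of this kind can be sketched as "take a high percentile of observed usage and add headroom." The example below is a minimal illustration under that assumption; the 95th percentile, the 20% headroom, the function name, and the sample data are all hypothetical choices, not values prescribed by Atmosly or by Vertical Pod Autoscaler.

```python
import math


def recommend_cpu_request(usage_millicores: list[int],
                          percentile: int = 95,
                          headroom_pct: int = 20) -> int:
    """Suggest a CPU request (in millicores) from observed usage samples."""
    if not usage_millicores:
        raise ValueError("need at least one usage sample")
    ordered = sorted(usage_millicores)
    # Nearest-rank percentile: smallest sample covering `percentile` % of the data.
    rank = max(1, math.ceil(percentile * len(ordered) / 100))
    baseline = ordered[rank - 1]
    return math.ceil(baseline * (100 + headroom_pct) / 100)


# Illustrative samples for a pod that currently requests 1000m.
samples = [120, 150, 130, 200, 180, 160, 140, 170, 190, 210]
print(recommend_cpu_request(samples))  # prints 252
```

Here a pod requesting 1000m would be recommended roughly a quarter of that, which matches the usage gap described above. Longer observation windows and separate treatment of memory would be needed before applying such a number in production.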

Idle Cluster Waste

Non-production clusters run 24/7 but are only actively used during business hours. Weekend and overnight capacity is pure waste.

Our Solution

Implement cluster scheduling (scale to zero overnight), namespace hibernation, and auto-pause for dev/test environments. Typical savings: 50-70% on non-prod.
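The core of any such schedule is a rule that decides when an environment should be up. The sketch below shows one possible rule in Python; the working hours, the should_be_running name, and the Monday-to-Friday assumption are illustrative, and a real setup would also need to handle time zones, holidays, and manual overrides.

```python
from datetime import datetime, time

WORK_START = time(8, 0)   # assumed start of the working day
WORK_END = time(19, 0)    # assumed end of the working day


def should_be_running(now: datetime) -> bool:
    """Return True if a non-production environment should be scaled up."""
    is_weekday = now.weekday() < 5  # Monday is 0, Friday is 4
    in_working_hours = WORK_START <= now.time() < WORK_END
    return is_weekday and in_working_hours


print(should_be_running(datetime(2024, 1, 8, 10, 0)))   # Monday morning: True
print(should_be_running(datetime(2024, 1, 8, 22, 0)))   # Monday night: False
print(should_be_running(datetime(2024, 1, 13, 10, 0)))  # Saturday: False
```

A scheduled job could evaluate a rule like this and scale node pools or workloads down to zero whenever it returns False.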

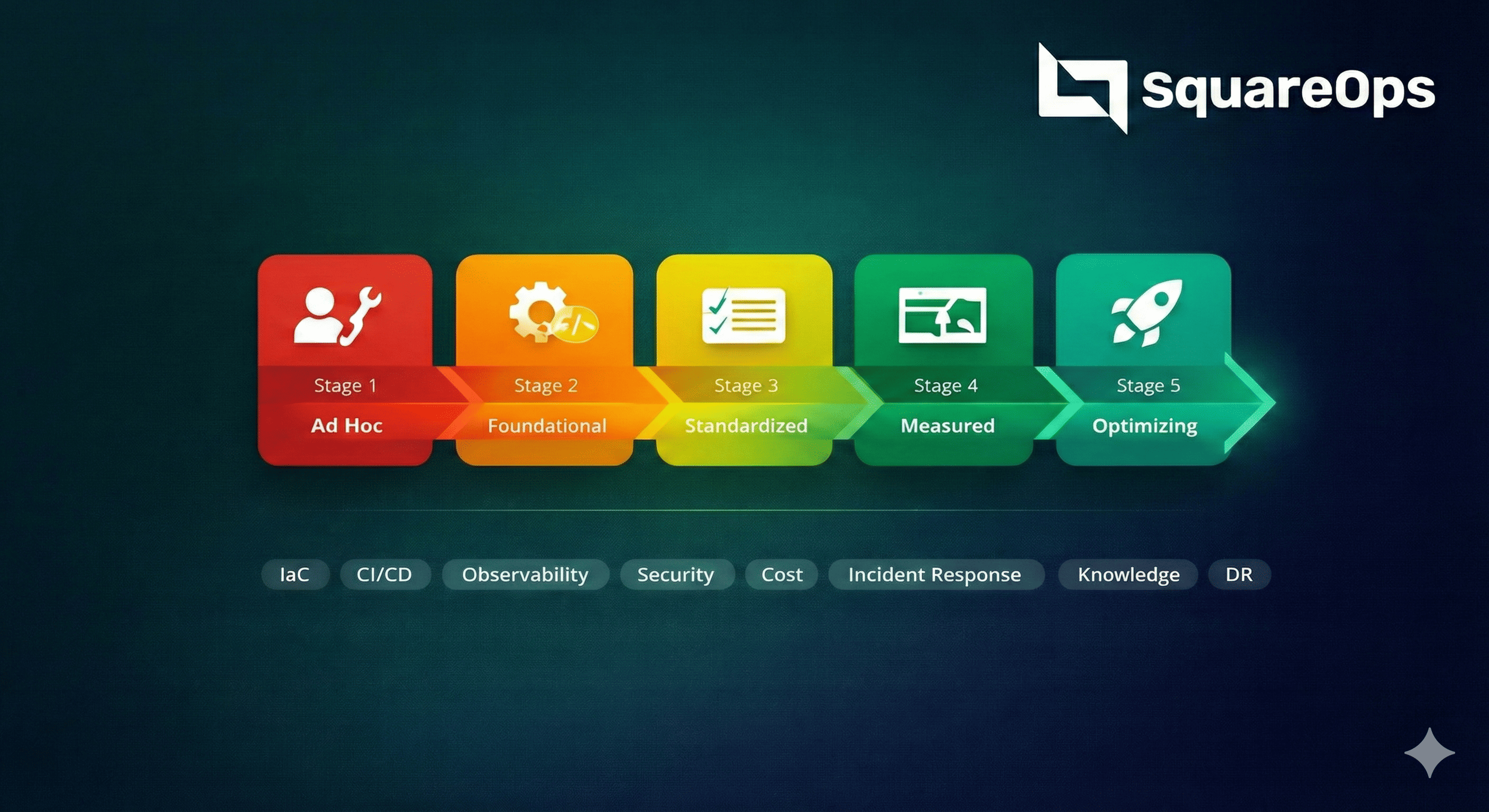

Our K8s Optimization Process

A structured approach to finding and eliminating Kubernetes cost waste.

Discovery & Assessment

Connect Atmosly to your clusters for full inventory. Analyze node types, resource utilization, and cost patterns, then identify the top optimization opportunities. Deliver a baseline cost report.

Quick Wins Implementation

Implement immediate savings: delete unused resources, rightsize obvious over-provisioning, enable spot instances for fault-tolerant workloads. Typical impact: 15-25% savings in week one.

Resource Optimization

Deep analysis of pod resource requests/limits. Implement Vertical Pod Autoscaler or provide rightsizing recommendations per workload. Improve bin-packing efficiency.

Autoscaling & Scheduling

Configure Cluster Autoscaler or Karpenter for efficient node provisioning. Implement the Horizontal Pod Autoscaler (HPA) for workload scaling. Set up scheduling for non-production environments.
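For context on what HPA does with a metric, the Kubernetes documentation describes its core calculation as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with no change when the ratio is within a small tolerance of 1. The Python sketch below mirrors that formula in simplified form; the function name and the min/max defaults are illustrative, and the real controller also accounts for pod readiness, missing metrics, and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified version of the HPA replica calculation."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target, do nothing
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas with a 60% CPU utilization target.
print(desired_replicas(4, current_metric=90, target_metric=60))  # prints 6
print(desired_replicas(4, current_metric=30, target_metric=60))  # prints 2
print(desired_replicas(4, current_metric=63, target_metric=60))  # prints 4
```

Because utilization is measured against requests, inflated requests make HPA scale later than it should, which is one reason rightsizing usually comes before autoscaling.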

Continuous Optimization

Ongoing monitoring via Atmosly dashboards. Monthly optimization reviews, new recommendation implementation, and cost anomaly response. Sustained savings over time.

How much are you overspending on Kubernetes?

Get a free K8s cost analysis with Atmosly. We'll show you exactly where your money is going and how much you can save.

Kubernetes Cost Optimization Strategies

Proven techniques we implement to reduce K8s costs by 30-50%.

Cluster Rightsizing

Analyze node utilization and workload requirements. Right-size node types, optimize instance families, and consolidate under-utilized clusters. Atmosly provides specific recommendations.

Spot/Preemptible Instances

Save 60-90% on compute with spot instances. Configure node pools, pod disruption budgets, and graceful handling of interruptions. Use Karpenter for intelligent spot provisioning.
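Since only fault-tolerant workloads usually move to spot, the cluster-wide saving is smaller than the headline discount. The sketch below estimates a blended cost under stated assumptions: the $0.20 hourly price, the 50% spot share, and the 70% discount are illustrative numbers, and the function name is hypothetical. Actual spot prices vary by instance type, region, and time.

```python
def blended_hourly_cost(on_demand_price: float,
                        node_count: int,
                        spot_share: float,
                        spot_discount: float) -> float:
    """Hourly cost of a node group that mixes on-demand and spot nodes."""
    spot_nodes = node_count * spot_share
    on_demand_nodes = node_count - spot_nodes
    spot_price = on_demand_price * (1 - spot_discount)
    return on_demand_nodes * on_demand_price + spot_nodes * spot_price


baseline = blended_hourly_cost(0.20, 10, spot_share=0.0, spot_discount=0.7)
mixed = blended_hourly_cost(0.20, 10, spot_share=0.5, spot_discount=0.7)
savings = 1 - mixed / baseline
print(f"{savings:.0%}")  # prints 35%
```

Moving half of the nodes to spot at a 70% discount cuts this node group's compute bill by about a third.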

Resource Request Optimization

Analyze actual CPU/memory usage vs requests. Implement Vertical Pod Autoscaler or provide per-workload rightsizing recommendations. Improve bin-packing efficiency.

Autoscaling Configuration

Properly configure Cluster Autoscaler or Karpenter for nodes and HPA for pods. Scale nodes and pods based on actual demand, not worst-case provisioning.

Non-Production Scheduling

Scale dev/staging clusters to zero overnight and weekends. Implement namespace hibernation. Save 50-70% on non-production environments.

K8s Cost Optimization by Platform

Platform-specific optimizations for maximum savings on each cloud provider.

AWS EKS Optimization

Spot instances with Karpenter, Savings Plans for on-demand capacity, Graviton instances for up to 20% savings, and EKS-specific cost allocation, all backed by AWS expertise.

GCP GKE Optimization

Spot VMs (the successor to Preemptible VMs), committed use discounts, Autopilot pricing optimization, and GKE-specific node auto-provisioning configuration.

Azure AKS Optimization

Spot node pools, Reserved Instances, AKS-specific autoscaling, and Azure Advisor integration for cost recommendations.