The Gold Standard for Kubernetes Monitoring

Prometheus is the CNCF-graduated monitoring system that has become the de facto standard for Kubernetes observability. Combined with Grafana for visualization and Alertmanager for alert routing, it provides a complete, open-source monitoring stack trusted by organizations from startups to Fortune 500 enterprises.

Unlike proprietary solutions, the Prometheus ecosystem gives you full control over your monitoring data, no per-host licensing fees, and a massive community with thousands of pre-built exporters and dashboards. But setting it up for production requires deep expertise—from high availability configuration to efficient storage management and meaningful alerting.

As part of our monitoring & observability services, we implement production-grade Prometheus stacks that scale with your infrastructure while integrating seamlessly with your DevOps workflows and SRE practices.

Our Prometheus & Grafana Services

End-to-end observability implementation from architecture design to ongoing optimization.

Prometheus Operator Deployment

Production-grade kube-prometheus-stack with HA configuration, proper resource limits, PVC-backed storage, and ServiceMonitor/PodMonitor CRDs for dynamic target discovery.

Custom Grafana Dashboards

Purpose-built dashboards for your applications, infrastructure, and business metrics. We create dashboards that surface actionable insights, not just pretty graphs.

Alertmanager Configuration

Intelligent alert routing with proper inhibition rules, grouping, and silencing. Integration with PagerDuty, Opsgenie, Slack, and custom webhooks. No alert fatigue.

Long-Term Storage (Thanos/Mimir)

Unlimited metric retention with object storage backends (S3, GCS, Azure Blob). Global query view, downsampling, and multi-cluster federation.

Exporter Implementation

Deploy and configure exporters for databases (MySQL, PostgreSQL, MongoDB), message queues (Kafka, RabbitMQ), web servers (nginx, Apache), and custom applications.

PromQL Training

Hands-on training for your team on writing efficient PromQL queries, creating recording rules, and building alerting rules that catch real issues.

Production Architecture Components

A properly architected Prometheus stack includes multiple components working together.

We deploy battle-tested architectures that handle millions of time series while maintaining sub-second query performance.

Prometheus Server

Scrapes metrics from targets, stores time series data, and evaluates alerting rules. We configure proper retention, WAL settings, and memory limits based on cardinality analysis.
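To illustrate how retention sizing follows from cardinality, the sketch below applies the capacity-planning formula from the Prometheus storage documentation: retention time in seconds, times ingested samples per second, times bytes per sample. The function name, the example figures, and the default of 2 bytes per sample are assumptions for illustration only; real usage varies with series churn and compression.

```python
def estimate_tsdb_disk_bytes(active_series, scrape_interval_s, retention_days,
                             bytes_per_sample=2.0):
    """Rough local-disk estimate for a Prometheus TSDB.

    bytes_per_sample is an assumed average (the Prometheus docs cite
    roughly 1-2 bytes per sample after compression).
    """
    # Each active series produces one sample per scrape interval.
    samples_per_second = active_series / scrape_interval_s
    retention_seconds = retention_days * 24 * 3600
    return retention_seconds * samples_per_second * bytes_per_sample


if __name__ == "__main__":
    # Hypothetical cluster: 1M active series, 30s scrapes, 15 days retention.
    size = estimate_tsdb_disk_bytes(1_000_000, 30, 15)
    print(f"{size / 1e9:.1f} GB")
```

An estimate like this is only a starting point; it is worth validating against the actual on-disk size once the server has run for a full retention period.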

Grafana

Visualization layer with dashboards, exploration tools, and alerting. We configure SSO (OIDC/SAML), provisioned dashboards via GitOps, and proper RBAC for multi-team access.

Alertmanager

Handles alert deduplication, grouping, inhibition, silencing, and routing to receivers. Critical for avoiding alert storms and ensuring the right people get notified.
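Grouping can be pictured as bucketing alerts by a chosen set of labels, so that one notification covers many related alerts. The sketch below is a simplified model of that idea, not Alertmanager's implementation; the function name and the sample alerts are hypothetical, and the label list plays the role of the group_by setting in an Alertmanager route.

```python
from collections import defaultdict


def group_alerts(alerts, group_by):
    """Bucket alert label sets by the values of the group_by labels.

    A missing label is treated as an empty value, matching the
    Prometheus data model.
    """
    groups = defaultdict(list)
    for labels in alerts:
        key = tuple((name, labels.get(name, "")) for name in group_by)
        groups[key].append(labels)
    return dict(groups)


if __name__ == "__main__":
    alerts = [
        {"alertname": "HighErrorRate", "cluster": "prod-eu", "pod": "api-1"},
        {"alertname": "HighErrorRate", "cluster": "prod-eu", "pod": "api-2"},
        {"alertname": "HighErrorRate", "cluster": "prod-us", "pod": "api-7"},
    ]
    for key, members in group_alerts(alerts, ["alertname", "cluster"]).items():
        print(key, len(members))
```

With these sample alerts, three firing pods collapse into two notifications, one per cluster.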

Node Exporter

Exposes hardware and OS metrics from Linux hosts—CPU, memory, disk I/O, network, filesystem. Essential for infrastructure-level visibility.

kube-state-metrics

Generates metrics about Kubernetes objects—deployments, pods, nodes, PVCs. Critical for understanding cluster state and resource utilization.

Thanos / Mimir

Provides horizontal scaling, long-term storage on object storage, global query view, and high availability. Essential for production workloads.

Monitoring Challenges We Solve

High Cardinality & Memory Issues

Prometheus runs out of memory and crashes when unbounded label cardinality from user IDs, request IDs, or other dynamic labels creates millions of unique time series.

Our Solution

Implement cardinality analysis tools, configure proper sample_limit and label_limit, use recording rules to pre-aggregate, and set up alerts for cardinality explosions.
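A quick way to see why a single dynamic label is dangerous: the worst-case number of series for a metric is the product of the distinct values of each label. The sketch below computes that upper bound; the function name, label names, and counts are hypothetical, and real series counts are usually lower because not every combination occurs.

```python
from math import prod


def worst_case_series(label_value_counts):
    """Upper bound on series for one metric: product of distinct label values."""
    return prod(label_value_counts.values())


if __name__ == "__main__":
    bounded = {"method": 5, "status": 10, "endpoint": 50}
    print(worst_case_series(bounded))

    # Adding a per-user label multiplies the bound by the number of users.
    unbounded = {**bounded, "user_id": 100_000}
    print(worst_case_series(unbounded))
```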

Alert Fatigue & Noise

Teams ignore alerts because they're overwhelmed with false positives, poorly tuned thresholds, and missing context. Critical issues get lost in the noise.

Our Solution

Design symptom-based alerts (not cause-based), set appropriate "for" durations so brief spikes do not page anyone, use inhibition rules, add runbook links, and create tiered severity levels with appropriate routing.
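The effect of a "for" duration can be modeled with a small state machine: an alert stays pending until its condition has held continuously for the configured time, and any false evaluation resets it. The following is a simplified sketch of that behavior, not Prometheus code; the function name and the sample evaluation sequence are assumptions.

```python
def alert_states(condition_by_eval, eval_interval_s, for_s):
    """Return the alert state after each rule evaluation.

    condition_by_eval: booleans, one per evaluation, True when the
    alert expression returned a result.
    """
    active_since = None
    states = []
    for i, condition in enumerate(condition_by_eval):
        now = i * eval_interval_s
        if not condition:
            # Any false evaluation resets the pending timer.
            active_since = None
            states.append("inactive")
            continue
        if active_since is None:
            active_since = now
        held_for = now - active_since
        states.append("firing" if held_for >= for_s else "pending")
    return states


if __name__ == "__main__":
    # A short blip at the start never fires; the sustained problem does.
    evals = [True, True, False, True, True, True, True]
    print(alert_states(evals, eval_interval_s=60, for_s=120))
```

In this example the two-evaluation blip is absorbed, and the alert fires only once the condition has held for the full two minutes.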

Limited Retention & No HA

By default, Prometheus retains 15 days of data on local disk and has no built-in high availability. A single Prometheus failure means losing monitoring, often during the very incidents where you need it most.

Our Solution

Deploy Thanos Sidecar or Mimir for unlimited retention on S3/GCS, implement Prometheus HA pairs with deduplication, and configure global query across all instances.

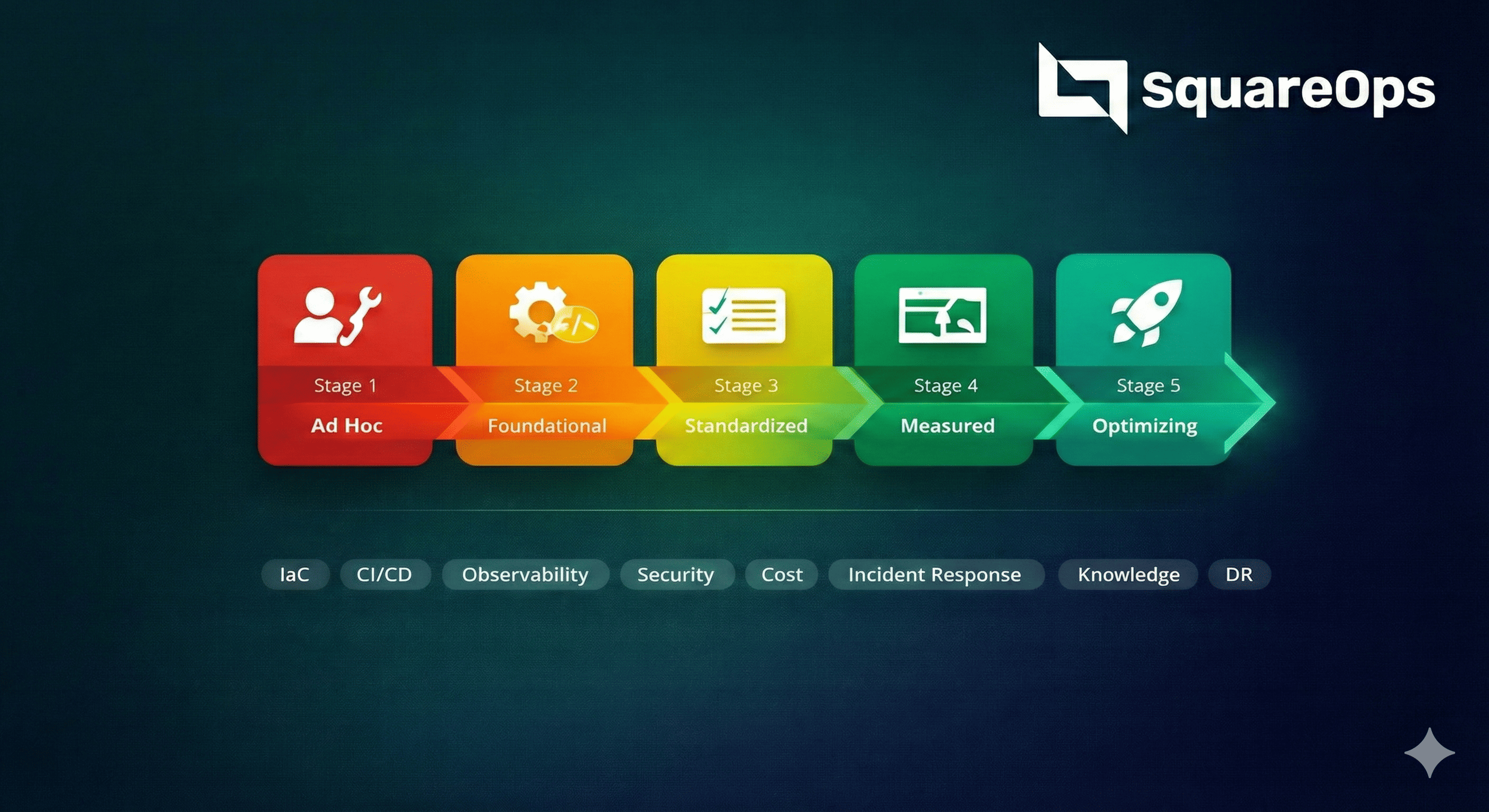

Our Implementation Process

A structured approach to deploying production-grade observability.

We've implemented Prometheus stacks for 100+ organizations. Our methodology ensures you get value quickly while building a foundation that scales.

Discovery & Assessment

Audit existing monitoring, identify metrics requirements, assess infrastructure scale (nodes, pods, services), and estimate cardinality. Define SLIs/SLOs for critical services.
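Once SLOs are defined, alert thresholds can be derived from the error budget. The sketch below shows the arithmetic behind a commonly used fast-burn threshold: consuming 2% of a 30-day error budget within 1 hour corresponds to a burn rate of 14.4, which for a 99.9% SLO gives the 14.4 * 0.001 error-ratio threshold used in the SLO Burn Rate pattern on this page. The function names and the 2%/1h/30-day figures are illustrative assumptions following the approach described in the Google SRE Workbook.

```python
def burn_rate(budget_fraction_consumed, alert_window_hours, slo_period_days=30):
    """Burn rate at which the given share of budget is spent in the window."""
    slo_period_hours = slo_period_days * 24
    return budget_fraction_consumed * slo_period_hours / alert_window_hours


def error_ratio_threshold(slo_target, rate):
    """Error ratio that corresponds to a burn rate for a given SLO target."""
    error_budget = 1 - slo_target
    return rate * error_budget


if __name__ == "__main__":
    rate = burn_rate(0.02, alert_window_hours=1)
    print(round(rate, 2))
    print(round(error_ratio_threshold(0.999, rate), 4))
```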

Architecture Design

Design Prometheus topology (single vs. federated vs. Thanos), storage requirements, retention policies, HA configuration, and integration points with existing tools.

Stack Deployment

Deploy kube-prometheus-stack via GitOps with production configurations. Set up Grafana with SSO, configure Alertmanager receivers, and deploy required exporters.

Dashboards & Alerts

Create custom dashboards for your applications and infrastructure. Implement USE/RED method metrics. Configure meaningful alerts with runbooks and proper routing.

Training & Handover

Train your team on PromQL, dashboard creation, and alert management. Document architecture decisions, provide runbooks, and optionally provide ongoing support.

Need Production-Grade Monitoring?

Get a free assessment of your current monitoring setup and a roadmap to observability excellence.

Schedule Consultation

Long-Term Storage: Thanos vs. Mimir

Both solutions solve Prometheus's storage limitations. We help you choose the right one.

Thanos

CNCF incubating project. Sidecar approach for existing Prometheus. Components: Sidecar, Store Gateway, Compactor, Query, Ruler. Excellent for gradual adoption without replacing Prometheus.

Best for: Existing Prometheus deployments, multi-cluster with independent teams, gradual migration path.

Grafana Mimir

Grafana Labs' scalable TSDB. Native multi-tenancy, horizontal scaling, and Prometheus-compatible API. Components: Distributor, Ingester, Querier, Compactor, Store Gateway.

Best for: Greenfield deployments, multi-tenant platforms, very large scale (Grafana Labs reports testing Mimir at 1 billion active series).

Amazon Managed Service for Prometheus

AWS-managed service compatible with Prometheus. Zero infrastructure management, automatic scaling, integrated with AWS services. Pricing is usage-based: samples ingested, storage, and queries.

Best for: AWS-native shops, teams wanting minimal ops overhead, compliance requirements needing managed services.

Google Cloud Managed Service for Prometheus

GCP-managed Prometheus service with global query capabilities. Integrates with GKE and Cloud Monitoring. Prometheus-compatible with added GCP features.

Best for: GCP-native environments, teams using Cloud Monitoring, hybrid cloud setups.

Essential PromQL Patterns We Implement

Well-crafted queries and recording rules are the foundation of effective monitoring.
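Several of the patterns in this section rely on functions whose behavior is worth understanding before trusting their output. As one example, histogram_quantile estimates a percentile by linear interpolation inside the bucket that contains the target rank, so accuracy depends on bucket boundaries. The sketch below mirrors that logic for classic histograms in simplified form; the function signature and sample buckets are assumptions, it expects 0 < q <= 1, and it omits the edge cases the real function handles.

```python
import math


def histogram_quantile(q, buckets):
    """Estimate a quantile from cumulative histogram buckets.

    buckets: list of (upper_bound, cumulative_count) pairs with at least
    two entries, including one whose upper_bound is math.inf.
    """
    buckets = sorted(buckets)
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    # Find the first bucket whose cumulative count reaches the rank.
    for i, (upper, cumulative) in enumerate(buckets):
        if cumulative >= rank:
            break
    if math.isinf(upper):
        # The quantile falls past the last finite bound; report that bound.
        return buckets[-2][0]
    lower = buckets[i - 1][0] if i > 0 else 0.0
    below = buckets[i - 1][1] if i > 0 else 0.0
    # Assume observations are spread evenly within the bucket.
    return lower + (upper - lower) * (rank - below) / (cumulative - below)


if __name__ == "__main__":
    sample = [(0.1, 50), (0.5, 90), (1.0, 99), (math.inf, 100)]
    print(histogram_quantile(0.5, sample))
    print(histogram_quantile(0.75, sample))
```

Because the result is interpolated, a P99 can never be more precise than the width of the bucket it lands in, which is one reason bucket layout deserves attention when instrumenting latency.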

Error Rate

RED Method: Track request error rates per service for rapid issue detection.

    sum by (service) (rate(http_requests_total{status=~"5.."}[5m]))
      /
    sum by (service) (rate(http_requests_total[5m]))

Latency Percentiles

P99 Histogram: Calculate P99 latency using histogram buckets.

    histogram_quantile(0.99,
      sum(rate(http_request_duration_seconds_bucket[5m])) by (le, service)
    )

CPU Saturation

USE Method: Detect container CPU throttling before it impacts performance.

    sum(increase(container_cpu_cfs_throttled_periods_total[5m]))
      /
    sum(increase(container_cpu_cfs_periods_total[5m]))

Memory Pressure

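OOM Prevention: Alert when container memory usage exceeds 90% of its limit. Containers without a limit report a limit of 0, so they are filtered out to avoid division by zero.

    container_memory_working_set_bytes
      /
    (container_spec_memory_limit_bytes > 0)
      > 0.9

Disk Prediction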

Predictive: Predict disk exhaustion 4 hours in advance using linear regression over the last 6 hours.

    predict_linear(node_filesystem_avail_bytes[6h], 4*3600) < 0

SLO Burn Rate

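Multi-Window: Multi-window burn rate alerting for SLO-based monitoring. For a 99.9% SLO, this fires when the error ratio exceeds 14.4 times the error budget over both the 1h and 5m windows.

    (
      1 - sum(rate(http_requests_total{status!~"5.."}[1h]))
          / sum(rate(http_requests_total[1h]))
    ) > (14.4 * 0.001)
    and
    (
      1 - sum(rate(http_requests_total{status!~"5.."}[5m]))
          / sum(rate(http_requests_total[5m]))
    ) > (14.4 * 0.001)

Exporters We Deploy & Configure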

Prometheus exporters for comprehensive infrastructure and application visibility.

Database Exporters

MySQL Exporter: Connections, queries, InnoDB metrics, replication lag

PostgreSQL Exporter: Connections, transactions, locks, replication

MongoDB Exporter: Operations, connections, replication, sharding

Redis Exporter: Memory, commands, keyspace, persistence

Queue Exporters

Kafka Exporter: Consumer lag, partition offsets, broker metrics

RabbitMQ Exporter: Queue depth, message rates, connections

SQS Exporter: Queue size, message age, DLQ metrics

NATS Exporter: Connections, messages, subscriptions

System Exporters

Node Exporter: CPU, memory, disk, network, filesystem

Blackbox Exporter: HTTP, TCP, ICMP, DNS probes

NGINX Exporter: Connections, requests, response codes

cAdvisor: Container resource usage and performance